Have you ever wanted to recreate the look or color palette of a favorite photo? Or wondered what it would take to turn a day scene into a night scene? Most of us, as professionals, put together reference images to give ourselves and our clients a better idea of what we would like the end result to look like. Reference and mood boards are how we convey our vision and style direction to our clients.

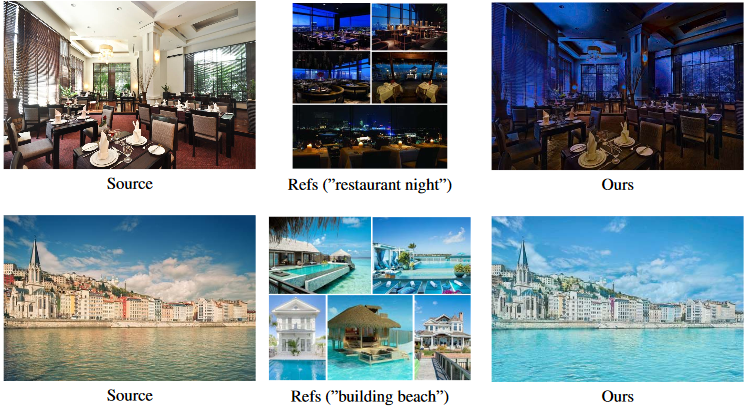

Researchers from Microsoft and the Hong Kong University of Science and Technology are using deep learning methods to apply the colors and styles from a series of reference images to a single source image, in a technique called “Style Transfer from multiple reference images.”

Examples of the Style Transfer From Multiple Reference Images in action

Essentially, the source and reference images should show the same type of scene and contain elements of the same classes, e.g. cityscapes featuring buildings, streets, trees, and cars. The researchers aim to achieve precise local color transfer from semantically similar references, which is essential for automatic and accurate image editing, such as makeup transfer and creating time-lapses from images.
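To get a feel for what "color transfer" means at its simplest, here is a minimal Python sketch. It is not the researchers' method: their approach uses deep learning to match semantically similar regions locally, while this toy version only shifts and scales each RGB channel of the whole source so its mean and spread match the reference. The function names and the tiny pixel lists are hypothetical, made up purely for illustration.

```python
from statistics import mean, pstdev

def match_channel(source, reference):
    """Shift and scale one color channel so its mean and spread match the reference."""
    src_mean, src_std = mean(source), pstdev(source)
    ref_mean, ref_std = mean(reference), pstdev(reference)
    # Avoid dividing by zero when the source channel is a single flat value.
    scale = ref_std / src_std if src_std > 0 else 1.0
    # Clamp to the valid 0-255 range after the adjustment.
    return [min(255.0, max(0.0, (v - src_mean) * scale + ref_mean)) for v in source]

def transfer_colors(source_pixels, reference_pixels):
    """Apply per-channel statistics matching to a list of (R, G, B) tuples."""
    src_channels = list(zip(*source_pixels))
    ref_channels = list(zip(*reference_pixels))
    matched = [match_channel(s, r) for s, r in zip(src_channels, ref_channels)]
    return [tuple(px) for px in zip(*matched)]

# Hypothetical toy data: a warm "day" source and a cool "night" reference.
day = [(200, 180, 120), (220, 200, 140), (180, 160, 100)]
night = [(20, 30, 80), (40, 50, 120), (30, 40, 100)]
result = transfer_colors(day, night)
```

Because this adjustment is global, a blue sky and a red brick wall get pushed in the same direction. That limitation is exactly why a local, semantically aware transfer like the one described above is appealing.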

You can download the Neural Color Transfer between Images research paper directly from here:

While the technique is still in development, there is a strong possibility it could be a game changer for colorists and retouchers in the future. In the meantime, we can still use our tried-and-true color grading methods to get the best results from our images.

If you haven’t already, be sure to check out Daniel Meadows’ post on Color Grading Like a Professional and Michael Woloszynowicz’s Color Grade Video Course.

Source: NVIDIA | Image Source: Pexels